Advanced Configuration¶

The following documentation provides guidelines to configure various aspects of OctoPerf On Premise Infra, like:

- System Settings: set up Docker behind a proxy, Elasticsearch system configuration, etc.,

- Mailing: support resetting account password via email,

- Storage: store resources (recorded requests/responses and more) either on local disk or Amazon S3,

- High Availability: HA is supported via built-in Hazelcast clustering.

OctoPerf EE is a Spring Boot application which uses YML configuration files.

Docker Proxy Settings¶

Docker can run from behind a proxy if required. The procedure is detailed in Docker Documentation.

Elasticsearch¶

The Elasticsearch database requires the vm.max_map_count kernel setting to be at least 262144. Set it with sysctl:

sysctl -w vm.max_map_count=262144

To set this value permanently, update the vm.max_map_count setting in /etc/sysctl.conf. To verify after rebooting, run sysctl vm.max_map_count.

See Elasticsearch VM Max Map Count for more information.

Custom Application YML¶

To define your own configuration settings, edit the application.yml file provided in the config/ folder.

Custom Application YML

- Stop OctoPerf EE with the command make clean (data is preserved),

- Edit the config/application.yml file provided in OctoPerf EE Setup,

- Start OctoPerf EE with the command make.

The configuration defined in your own application.yml takes precedence over the default built-in configuration.

Info

Please refer to the Spring Boot documentation for general settings (such as the file upload limit).

Warning

The application.yml file must be indented with 2 spaces for each level.

Environment Variables¶

When you only need to override a single setting, defining an environment variable when launching the OctoPerf EE container is the simplest approach.

How to define an environment variable

- Edit docker-compose.yml provided in OctoPerf EE Setup,

- Locate the environment: section and add the relevant environment variables,

- Restart the application using docker compose.

Environment variables take precedence over settings defined in YML configuration files.
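As a rough aid for naming such variables, the sketch below applies Spring Boot's documented relaxed binding rule (replace dots with underscores, remove dashes, convert to uppercase) to a property name. It assumes OctoPerf EE relies on standard Spring Boot binding; the source does not list the exact variable names, and to_env_var is a hypothetical helper, not part of OctoPerf.

```python
def to_env_var(property_name: str) -> str:
    """Convert a Spring Boot property name to its canonical environment variable form.

    Rule applied: replace '.' with '_', remove '-', then uppercase.
    """
    return property_name.replace(".", "_").replace("-", "").upper()


# Property names taken from this page.
for name in ("server.public.port", "elasticsearch.hostname"):
    print(f"{name} -> {to_env_var(name)}")
```

The resulting names (for example SERVER_PUBLIC_PORT) would then go under the environment: section of docker-compose.yml.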

Custom Settings¶

Advertised Server¶

The backend advertises itself using server settings:

server:
  scheme: https
  private-root-certificate: false # true = support SSL Certificates signed by self / private Root Certificates
  port: 8090 # internal server port
  hostname: api.octoperf.com
  public:
    port: 443 # publicly exposed port

The server port is configurable via:

- server.port: defines the internal server port running inside the enterprise-edition container,

- server.public.port: defines the port advertised to agents and JMeter containers. If not defined, it falls back to the server.port setting.

Info

The server.public.port setting is useful when the backend is behind a load balancer. Load balancers typically listen on port 80 or 443. For security reasons, the internal server cannot run on a port <= 1024, which is why both settings exist.

The hostname must be reachable from all load generators: the JMeter containers launched on agent hosts by OctoPerf EE need to access the backend through this hostname. The configuration above shows our SaaS server configuration.

Warning

Never use localhost because it designates the container itself. This hostname must be accessible from the agents/users perspective.

Server Port¶

As the backend is behind Nginx by default, changing the default port (which is 80) requires a few steps. Let's say you want to run the server on port 443:

- In nginx/default.conf, locate listen 80; and change 80 to 443,

- In docker-compose.yml, locate 80:80 and replace it with 443:443,

- Set the server.public.port environment setting to 443,

- Stop and destroy the containers by running docker-compose down,

- Restart the containers by running docker-compose up --build -d.

The Nginx frontend port must always be the same as backend server port because the backend advertises this port to docker agents (to communicate).

Warning

After changing the port, you will have to reinstall all agents, otherwise they will fail to connect to the backend.

Internal Proxies¶

When the backend runs inside a Kubernetes cluster behind an ingress controller, you need to specify server.tomcat.remoteip.internal-proxies with the IPs of both the internal ingress controllers (usually private IPs like 10.42.xx.xx) and your ingress server public IPs.

Without this configuration, the remote IPs of the users and agents performing requests to the backend server are incorrectly detected.

Here is an example configuration:

server:
  shutdown: graceful # Finish handling current requests before shutting down
  forward-headers-strategy: native # Use X-Forwarded-For HTTP header to resolve remote IPs
  tomcat:
    remoteip:
      internal-proxies: "10\\.\\d{1,3}\\.\\d{1,3}\\.\\d{1,3}" # Regexp: Ignore internal IPs like 10.xx.xx.xx, resolve remote IP instead

For an exhaustive list of available properties, see Common Application Properties.
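Before deploying an internal-proxies pattern, it can help to check which addresses it actually covers. The sketch below uses Python's re module as a stand-in for the Java regex engine (for a pattern this simple the syntax is the same) and requires the whole address to match. The sample IP addresses are made up for illustration.

```python
import re

# Pattern from the example above, after YAML unescaping ("\\." becomes "\.").
INTERNAL_PROXIES = re.compile(r"10\.\d{1,3}\.\d{1,3}\.\d{1,3}")


def is_internal_proxy(ip: str) -> bool:
    """Return True when the whole address matches the internal-proxies pattern."""
    return INTERNAL_PROXIES.fullmatch(ip) is not None


# Hypothetical addresses: an ingress pod IP, a LAN IP and a public IP.
for ip in ("10.42.0.17", "192.168.1.5", "203.0.113.10"):
    print(ip, is_internal_proxy(ip))
```

Only the first address is treated as an internal proxy. To also cover public ingress IPs, the pattern would need extra alternatives separated by |.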

Elasticsearch¶

General Settings¶

The backend connects to Elasticsearch database using elasticsearch.hostname setting:

elasticsearch:
  hostname: elasticsearch
  username: elastic # default username is "elastic"
  password: # empty by default; if none provided, no authentication is used
  client:
    buffer-limit: 104857600 # 100MB by default
  indices:
    prefix: octoperf_
  scroll:
    size: 256
    keep-alive-min: 15

Elasticsearch can be configured with:

- hostname: an IP or a hostname accessible from the OctoPerf EE container. Multiple hosts can be defined, separated by commas,

- username: username to authenticate with Elasticsearch if minimal security is enabled. elastic is the default username,

- password: password to authenticate with Elasticsearch. If empty, no authentication is used,

- client:

  - buffer-limit: maximum response size the client can handle,

- indices:

  - prefix: octoperf_ by default. Indices are created with the octoperf_ prefix. Example: the octoperf_user index contains the user accounts,

  - alias: none by default. Indices can be created with the given alias. Aliases are useful to upgrade a database with no service interruption. Make sure that existing indices already have the same aliases configured before editing this setting (otherwise they won't be found by the backend),

- scroll:

  - size: scroll window size, 256 elements by default,

  - keep-alive-min: scroll keep-alive timeout between each window, in minutes. 15 by default.

Info

Never use localhost hostname. Use the IP address of the machine hosting your database, even if it's on the same machine.

Snapshots Settings¶

Snapshots are the equivalent of an incremental backup of all the database indices. The backend can trigger an Elasticsearch snapshot creation every night with the configuration below:

elasticsearch:
  snapshots:
    enabled: true
    repository: nfs-server # name of the repository to backup
    keep-last: 7 # 7 days rolling window

Tip

Follow this blog post to store Elasticsearch backups on a shared NFS.

Container Orchestrator¶

Both docker (by default) and podman are supported:

octoperf:
  agent:
    orchestrator: docker # or podman

It affects the command-line being generated to start the agent.

SMTP Server¶

An SMTP mail server can be specified in order to support user account password recovery:

spring:
  mail:
    enabled: true
    host: smtp.mycompany.com
    port: 587
    username: username@mycompany.com
    password: passw0rd
    properties:
      mail.smtp.socketFactory.class: javax.net.ssl.SSLSocketFactory
      mail.smtp.auth: true
      mail.smtp.starttls.enable: true
      mail.smtp.starttls.required: true
    from:
      name: MyCompany Support
      email: from@mycompany.com

For a complete list of supported properties, see Javax Mail Documentation.

High Availability¶

Prerequisites:

- You need at least 3 hosts in a cluster with networking enabled between them.

To enable high-availability, the different backend servers need to form a cluster. Each backend server must be able to communicate with others. To enable HA using Hazelcast, define the following configuration:

clustering:
  driver: hazelcast
  members: enterprise-edition
  quorum: 2

It takes the following configuration:

- driver: either noop (no clustering), hazelcast (using Hazelcast) or ignite (using Apache Ignite),

- members: comma-separated list of hostnames or IPs of the members of the cluster,

- quorum: minimum number of nodes necessary to operate. quorum = (n+1) / 2, where n is the total number of nodes in the cluster.

3 Containers Setup¶

Let's describe what's being configured here:

- driver: hazelcast selects Hazelcast clustering. Hazelcast is a distributed Java framework we use internally to achieve HA,

- hazelcast.members: hostnames of all the backends, separated by commas,

- hazelcast.quorum: the minimum number of machines within the cluster that must be operational. quorum = (n + 1) / 2, where n is the number of members within the cluster.

hazelcast.members can be either a DNS hostname pointing to multiple IPs or multiple IPs separated by commas.
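The quorum formula can be checked quickly for common cluster sizes. The sketch below is a hypothetical helper, not part of OctoPerf; because the text does not say how to round when n is even, it only accepts odd cluster sizes, for which (n + 1) / 2 is a whole number.

```python
def quorum(n: int) -> int:
    """Return (n + 1) / 2 for an odd, positive cluster size n."""
    if n < 1 or n % 2 == 0:
        # Rounding for even sizes is not specified in the text, so refuse them.
        raise ValueError("this sketch only handles odd, positive cluster sizes")
    return (n + 1) // 2


for nodes in (3, 5, 7):
    print(f"{nodes} nodes -> quorum {quorum(nodes)}")
```

For the 3-host minimum listed in the prerequisites, this gives the quorum: 2 used in the example configuration.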

Resources Storage¶

Resources like recorded requests, recorded responses or files attached to a project are stored inside the container in folder /home/octoperf/data:

storage:
  quota:
    max-file-count: 1024 # max number of files per project / result
    max-total-size: 1047741824 # max total bytes per project / result
  driver: fs
  fs:
    folder: data

When no Docker volume mapping is configured, the data is lost when the container is destroyed. To avoid this, set up a volume mapping, as for the configuration folder. Example: /home/ubuntu/docker/enterprise-edition/data:/home/octoperf/data

When running the backend in HA, it's not possible to store data on the local disk. The request being sent to get resources may hit any backend server, but the wanted resource may be stored on another server. In this case, it's better to store resources on a global service shared by all backends like Amazon S3.

Configuring Amazon S3 Storage

storage:
  driver: s3
  s3:
    region: eu-west-1
    bucket: my-bucket
    access-key:
    secret-key:

Let's describe what is being configured here:

- driver: s3 selects the Amazon S3 driver,

- s3:

  - region: specifies the region where the target S3 bucket is located,

  - bucket: S3 bucket name, usually my-bucket.mycompany.com,

  - access-key: an AWS access key with access granted to the given bucket,

  - secret-key: the AWS secret key associated with the access key.

We suggest setting up an Amazon user with permissions granted only on the target S3 bucket. Here is an example policy:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "Stmt1427454857000",
      "Effect": "Allow",
      "Action": [
        "s3:Get*",
        "s3:List*",
        "s3:Put*",
        "s3:Delete*"
      ],
      "Resource": [
        "arn:aws:s3:::bucket.mycompany.com",
        "arn:aws:s3:::bucket.mycompany.com/*"
      ]
    }
  ]
}
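To adapt the example policy to another bucket, both Resource entries must be updated together: one for the bucket itself and one for its objects. The sketch below generates such a policy for a given bucket name. bucket_policy is a hypothetical helper, and the Sid field is left out because it is optional in IAM policies.

```python
import json


def bucket_policy(bucket: str) -> dict:
    """Build a policy shaped like the example above for the given bucket name."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:Get*", "s3:List*", "s3:Put*", "s3:Delete*"],
                "Resource": [
                    f"arn:aws:s3:::{bucket}",    # the bucket itself
                    f"arn:aws:s3:::{bucket}/*",  # every object inside the bucket
                ],
            }
        ],
    }


# "my-bucket" matches the bucket name used in the storage configuration above.
print(json.dumps(bucket_policy("my-bucket"), indent=2))
```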

User Management¶

User management can be customised using the following configuration:

users:

administrators: admin@octoperf.com # comma-separated list of usernames

registration:

enabled: true # default: true. Are new users allowed to create an account?

welcome-email:

enabled: true # default: true (available in version >= 15.0.1)

email-activation:

enabled: false # default: false. Should users receive an email to activate their account?

disposable-emails:

block-known-domains: true # default: false.

custom-domains: mydomain.com # default: none. custom user-defined email domains to block

password-recovery:

enabled: true # default: true. Can users recover their account password by email?

explicit-login:

enabled: true # default: true. Can users login using the regular login form?

Email activation requires a valid email configuration (otherwise users won't receive the activation email).

Data Eviction¶

Unnecessary data like old results or orphaned files can be automatically deleted using the following settings:

octoperf:
  eviction:
    audit-logs:
      enabled: true # false by default
      months: 24
    test-results:
      enabled: true # false by default
      months: 24
    orphaned-project-blob: # orphaned project files
      enabled: true # false by default

By default, evicting data is disabled.

Runtime settings¶

Several runtime settings can be configured:

octoperf:
  runtime:
    abort-stale-test:
      timeout-min: 20 # Duration in min before any unresponsive test is killed
    check-vu:
      max-iterations: 5 # Maximum number of iterations allowed for a check VU

Single Sign-On¶

OpenID Connect (OAuth2)¶

OctoPerf EE supports OAuth2 / OpenID Connect authentication through Spring Boot properties. Here is an example application.yml:

spring:
  security:
    oauth2:
      client:
        registration:
          okta:
            client-id: okta-client-id
            client-name: okta
            client-secret: okta-client-secret
            allowed-domains: "mydomain.com, myotherdomain.com" # (Optional) restrict domains allowed to login. If empty, all are accepted
            username-suffix: "" # (Optional) Example: @mydomain.com if the username is not a valid email.
            name: "Okta" # (Optional)
            description: "Login With Okta" # (Optional)
            scope: openid,email # oauth2 scopes
            client-authentication-method: client_secret_basic
            authorization-grant-type: authorization_code
            redirect-uri: "{baseUrl}/{action}/oauth2/code/{registrationId}" # DO NOT MODIFY THIS SETTING
        provider:
          okta:
            authorization-uri: https://your-subdomain.oktapreview.com/oauth2/v1/authorize
            token-uri: https://your-subdomain.oktapreview.com/oauth2/v1/token
            user-info-uri: https://your-subdomain.oktapreview.com/oauth2/v1/userinfo
            jwk-set-uri: https://your-subdomain.oktapreview.com/oauth2/v1/keys
            user-name-attribute: sub

The OAuth2 provider (here okta) must then be declared in the UI configuration file config.json to enable OAuth2 login. The user-name-attribute setting must point to the attribute holding the username of the user logging in, and that attribute must be an email. If it is not, please also define the username-suffix property.

Only emails are allowed as usernames. For example, with username-suffix set to @mydomain.com, when the user jsmith logs in, an account is created with the username jsmith@mydomain.com.

Info

In order for the login page to be compatible with those changes, you must also edit the config.json file.

Microsoft Azure¶

spring:
  security:
    oauth2:
      client:
        registration:
          azure:
            provider: azure
            client-name: Azure
            client-id: ${CLIENT_ID} # CLIENT_ID environment variable
            client-secret: ${CLIENT_SECRET} # CLIENT_SECRET environment variable
            scope: openid, email, profile
        provider:
          azure:
            issuer-uri: https://login.microsoftonline.com/${TENANT_ID}/v2.0 # TENANT_ID environment variable
            user-name-attribute: email

When using Microsoft Azure authentication with OpenID Connect, it's important to use at least v2.0 API version endpoints. As stated in their notes and caveats:

We recommend that all OIDC compliant apps and libraries use the v2.0 endpoint to ensure compatibility.

Otherwise, you may experience issues retrieving the account email and associating it with an OctoPerf account.

If you plan to configure Azure SSO through the integrated SSO Administration Panel, we suggest using the following settings.

SSO Registration:

- Client ID: your client id,

- Client Secret: your client secret,

- Client Name: same as the SSO id, in lowercase without any space,

- Auth Method: Client Secret Basic,

- Auth Grant Type: Authorization Code,

- Scopes: openid,email,profile

- Allowed domains: email domain name of users registering. Example: mycompany.com,

- Username suffix: appends @mycompany.com if configured with mycompany.com (in case the username attribute is not a valid email),

- Redirect URI: {baseUrl}/{action}/oauth2/code/{registrationId}

SSO Provider: (replace TENANT_ID by your own tenant id provided by Microsoft Azure)

- Authorization URI: https://login.microsoftonline.com/TENANT_ID/oauth2/v2.0/authorize

- Token URI: https://login.microsoftonline.com/TENANT_ID/oauth2/v2.0/token

- User Info URI: (leave empty),

- JWK Set URI: https://login.microsoftonline.com/TENANT_ID/discovery/v2.0/keys

- Issuer URI: https://login.microsoftonline.com/TENANT_ID/v2.0

- Username Attribute: preferred_username (SSO attribute which maps the user email).

Info

In order for the login page to be compatible with those changes, you must also edit the UI configuration file.

LDAP¶

By default, OctoPerf EE creates and manages users inside its own database.

OctoPerf EE supports seamless integration with a third-party authentication server (Single Sign-On, aka SSO) based on the LDAP protocol.

Info

It's strongly recommended to be assisted by your System Administrator when configuring OctoPerf to support LDAP authentication.

Configuring LDAP Authentication

users:
  driver: ldap
  ldap:
    # ldap or ldaps
    protocol: ldap
    hostname: localhost
    port: 389
    base: dc=example,dc=com
    principal-suffix: "@ldap.forumsys.com"
    authentication:
      # anonymous, simple, default-tls, digest-md5 or external-tls
      method: simple
      username: cn=read-only-admin,dc=example,dc=com
      password: password
    # Cache Ldap users
    cache:
      enabled: true
      durationSec: 300
    # user password encryption
    # OpenLDAP: leave as plain (even if encoded within Ldap)
    password:
      type: plain
      # sha, ssha, sha-256, md5 etc.
      algorithm: sha
    # LDAP Connection pooling
    pooled: false
    # User mapping
    object-class: person
    attributes:
      id: uid
      name: cn
      mail: mail
    # Ignorable errors
    ignore:
      partial-result: false
      name-not-found: false
      size-limit-exceeded: true
    # Filters that will be added to the LDAP query as "key=value AND key2=value2"
    query:
      filters:
        memberOf: CN=groupname,OU=ApplicationGroupes,OU=Users,DC=domain,DC=com

Info

The configuration above shows the default configuration used by the OctoPerf server when ldap driver is configured.

The LDAP authentication supports a variety of settings:

- protocol: either ldap or ldaps,

- hostname: hostname of the LDAP server,

- port: 389 by default, LDAP server connection port,

- base: an LDAP query that defines the directory tree starting point,

- principal-suffix: empty by default. Example: @ldap.forumsys.com. Suffix appended to the user name to authenticate with the LDAP server,

- authentication:

  - method: authentication method to use. Can be anonymous, simple, default-tls, digest-md5 or external-tls. The last one requires additional JVM configuration,

  - username: depending on the authentication method. Must be a valid LDAP Distinguished Name (DN),

  - password: depending on the authentication method. Password associated with the given username,

- cache:

  - enabled: true by default. Enables the user cache to reduce the load on the LDAP server,

  - durationSec: 300 sec by default. Any change on the LDAP server takes up to this duration to be taken into account,

- password: defines how the user password should be encrypted,

  - type: plain by default. Set it to hash and refer to algorithm to set the right hashing algorithm,

  - algorithm: sha by default, required if type is set to hash. Supports: sha, sha-256, sha-384, sha-512, ssha, ssha-256, ssha-384, ssha-512, md5, smd5, crypt, crypt-md5, crypt-sha-256, crypt-sha-512, crypt-bcrypt and pkcs5s2,

- pooled: whether to use a connection pool to connect to the LDAP server. Pooling speeds up the connection,

- object-class: objectClass defines the class attribute of the users. Users are filtered based on this value,

- attributes:

  - id: required. Maps the given attribute (uid by default) to the user id,

  - name: optional. Maps the given attribute (cn by default) to the user first name and last name,

  - mail: optional. Maps the given attribute (mail by default) to the user mail. If empty, it uses the id concatenated with the principal-suffix,

- ignore:

  - partial-result: optional. Set to true to ignore PartialResultException. AD servers typically have a problem with referrals. Normally a referral should be followed automatically, but this does not seem to work with AD servers. The problem manifests itself with a PartialResultException being thrown when a referral is encountered by the server. Setting this property to true works around this problem,

  - name-not-found: specifies whether NameNotFoundException should be ignored in searches. In previous versions, a NameNotFoundException caused by the search base not being found was silently ignored,

  - size-limit-exceeded: specifies whether SizeLimitExceededException should be ignored in searches. This is typically what you want if you specify a count limit in your search controls.

Warning

Choosing between Internal and LDAP authentication must be done as soon as possible. Existing internal users won't match LDAP ones if changed later, even if they share the same username.

When using LDAP authentication, a few features related to user management are disabled or non-functional:

- changing or resetting a user password,

- editing user profile information,

- and registering a new user through the registration form.

Note

You should configure the UI config.json file using LDAP login settings.

Virtual User Validation Storage¶

Virtual user validation HTTP requests and responses are stored within Elasticsearch by default.

validation:
  driver: elasticsearch # or blob, which means on disk
  retention-days: 1 # how much time validation data should be kept

Validation HTTP requests can be stored either in blob storage (with validation.driver: blob; see the Resources Storage section) or within the database (with validation.driver: elasticsearch).

Errors storage¶

The Error table keeps track of only two errors of each kind. It also has some max limits per JMeter container and test result that can be configured:

octoperf:
  runtime:
    errors:
      max-per-test: 500 # max errors per test result,
      max-per-instance: 100 # max errors per jmeter container / instance,
      max-per-request: 2 # max identical errors/code per request.

Warning

Increasing these values can increase the storage size required. It can also prevent your reports from loading if you try to display too many errors per report.

Nightly Cleanup Batches¶

Some internal batch tasks (disabled by default) can be run every night to reclaim unnecessary disk space being used. Those batch jobs can be enabled using the following properties:

# Available in version >= 12.0.3
octoperf:
  eviction:
    # scans results to keep only the first 500 error requests / responses
    bench-error-blob:
      enabled: false
    # scans virtual users modified within the last 2 days to remove orphaned recorded requests / responses
    orphaned-http-recorded:
      enabled: false
    # removes recorded requests / responses of virtual users with 12 <= lastModified <= 24 months
    http-recorded:
      enabled: false
      min-months: 12
      max-months: 24
    # scans projects storage folder and removes them if the project doesn't exist anymore
    orphaned-project-blob:
      enabled: false
    # scans results storage folder and removes them if the result doesn't exist anymore
    orphaned-result-blob:
      enabled: false

When upgrading from version 12.0.2 or below, we suggest temporarily enabling those batches to clean up previously undeleted files. Those batches run during the night.

reCAPTCHA¶

Google's reCAPTCHA can be used to prevent bots from creating accounts on your OctoPerf platform.

recaptcha:
  enabled: true
  endpoint: https://www.google.com
  private.key: your-private-key

It takes the following configuration:

- enabled: true to activate reCAPTCHA,

- endpoint: https://www.google.com to use Google's reCAPTCHA service,

- private.key: your reCAPTCHA private key (obtained in Google's reCAPTCHA admin console).

Warning

The public reCAPTCHA key must be configured in the config.json file of the frontend, using the JSON key "captcha": "your-public-key".

Please have a look at the GUI / Frontend configuration section to know how to map the configuration file.

JMeter Plugins¶

By default, JMeter plugins are not downloaded. This feature can be configured as follows:

jmeter:
  plugins:
    download-from: NONE

JMeter plugins are downloaded from:

- SERVER: the OctoPerf server downloads them when importing the JMX. The plugin files are available within project files,

- JMETER: PluginsManagerCMD is executed just before running the test with the install-for-jmx argument,

- NONE: plugins are not downloaded at all.

File Upload Limit¶

The default file upload limit is set to 200MB.

spring:
  servlet.multipart:
    max-request-size: 200MB
    max-file-size: 200MB

This example sets the limit to 200MB.

Notifications¶

Tests can be marked as failed when the error percentage is higher than a certain value.

octoperf:
  notifications:
    max-errors-percent: 5

You can configure system-wide notifications to be sent by your OctoPerf server:

octoperf:
  notifications:
    enabled: true
    slack:
      - channel: user-activity
        token: XXX
        eventIds:
          - CHANGE_USERNAME
          - CLOUD_HOST_ISSUE
          - JMX_IMPORT
          - TEST_ENDED
          - TEST_ERROR
          - TEST_FAILED
          - TEST_PASSED
          - TEST_STATUS
          - TEST_STARTED
          - USER_ACTIVATED
          - USER_LOGIN
          - USER_LOGOUT
          - USER_PASSWORD_CHANGED
          - USER_REGISTERED

Amazon Instance Tags¶

AWS instances created by OctoPerf can be tagged with the following settings:

octoperf:
  amazon:
    tags:
      key: value
      key2: value2

Those tags are applied to all Amazon providers configured on the platform.

UI configuration¶

The file config.json lets you configure the UI of OctoPerf. An example of this configuration file:

{
  "version": "octoperf-1",
  "backendApiUrl": "https://api.octoperf.com",
  "docUrl": "https://api.octoperf.com/doc",
  "adminEmail": "support@octoperf.com",
  "login": {
    "@type": "form",
    "register": true,
    "activate": true,
    "forgotPassword": true
  },
  "notice": {
    "text": "BETA version: classic UI is available at [https://api.octoperf.com/app](https://api.octoperf.com/app).",
    "height": 20,
    "color": "white",
    "backgroundColor": "#171e29"
  },
  "twitter": true,
  "cloud": true,
  "recaptcha": "recaptcha-key"
}

This file supports a variety of settings:

- version: the frontend application version used to store data in the browser local storage (changing this version resets all stored local preferences for every user),

- backendApiUrl: the URL of the backend server, i.e. https://api.octoperf.com for OctoPerf SaaS,

- docUrl: the URL of the documentation, i.e. https://api.octoperf.com/doc for OctoPerf's public documentation,

- adminEmail: the email address the users may contact for Administrator operations such as removing a Workspace,

- login: see configuration below,

- notice: replaces the application footer with a custom message (height is in pixels and text is markdown formatted),

- twitter: [true/false] displays a Twitter feed on the right side of the application,

- cloud: [true/false] activates a tab in the Administration page that lists all currently started Cloud instances,

- recaptcha: "reCAPTCHA_public_key" your reCAPTCHA public key, see backend's configuration.

You can map a docker volume to this file /usr/share/nginx/html/ui/assets/config.json to customize its content, and for example, update the administrator email address.

This must be done in the docker-compose.yml file. Simply edit the enterprise-ui service to map the file:

enterprise-ui:
  volumes:
    - /path/to/config.json:/usr/share/nginx/html/ui/assets/config.json

UI Login configuration¶

UI Form login configuration¶

This is the most basic mode of login configuration used to connect to the backend server. We assume here that the backend manages users on its own.

{
  "login": {
    "@type": "form",
    "register": true,
    "activate": true,
    "forgotPassword": true
  }
}

- @type: the type of login configuration, must be set to "form",

- register: [true/false] register page availability,

- activate: [true/false] activate page availability,

- forgotPassword: [true/false] forgot password page availability.

UI OAuth login configuration¶

The page http://FRONTEND_URL/#/access/connect/{clientId} is automatically generated based on the oauth2 settings defined server-side in application.yml.

{
  "login": {
    "@type": "oauth",
    "id": "clientId"
  }
}

The supported settings are:

- @type: the type of login configuration, must be set to "oauth",

- id: the OAuth client id, okta in the server-side configuration example.

UI LDAP login configuration¶

If you use the server-side LDAP configuration, you can set the frontend configuration to:

{
  "login": {
    "@type": "ldap"
  }
}

This will disable register, activate, and forgot password pages.

The only supported setting is:

- @type: the type of login configuration, must be set to "ldap".
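A mistyped login block in config.json is easier to catch before the file is mounted into the container. The sketch below checks that the login type is one of the three documented above. check_login is a hypothetical helper, and treating id as mandatory for the oauth type is an assumption, not something this page states.

```python
import json

# Login types documented in this section.
LOGIN_TYPES = {"form", "oauth", "ldap"}


def check_login(config_text: str) -> str:
    """Parse a config.json document and return its login type, or raise ValueError."""
    login = json.loads(config_text).get("login", {})
    login_type = login.get("@type")
    if login_type not in LOGIN_TYPES:
        raise ValueError(f"unsupported login type: {login_type!r}")
    # Assumption: an oauth login is unusable without the client id.
    if login_type == "oauth" and not login.get("id"):
        raise ValueError("oauth login requires an id")
    return login_type


print(check_login('{"login": {"@type": "oauth", "id": "okta"}}'))
```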

Troubleshooting¶

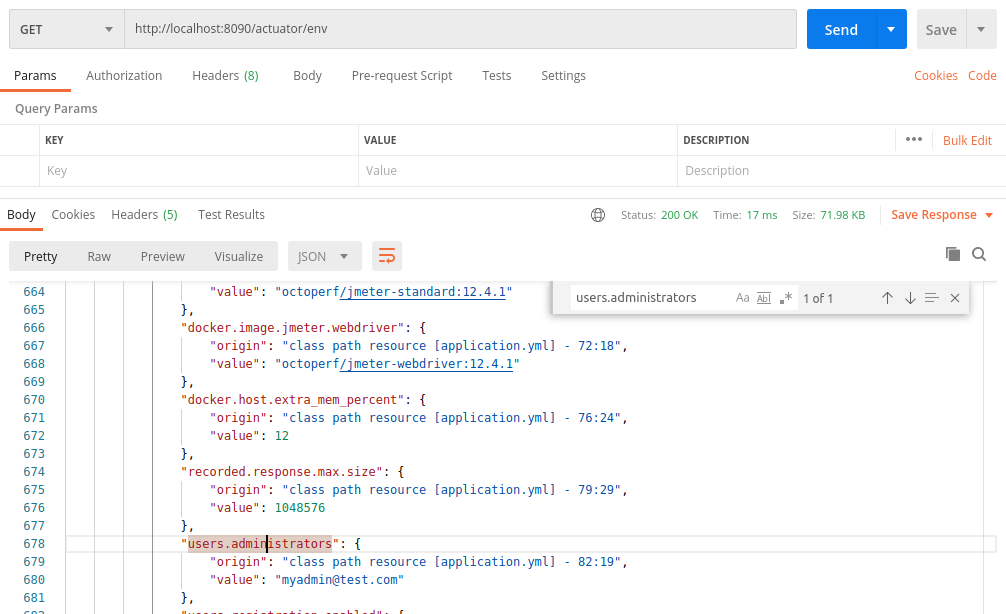

It is possible to ask the OctoPerf enterprise-edition backend to expose the value of all the properties. This is useful for debugging purposes, but make sure to disable it once you're done or you'll be publicly exposing critical security information.

To do this, use the following property:

management:
  endpoints:
    web:
      exposure:
        include: "*"

After restarting the enterprise edition server, you will be able to access all the environment settings via http://<baseURL>/actuator/env.

Warning

This should only be activated for debugging purposes, since with this setting critical security information is publicly exposed.