Deploy OctoPerf On-Premise Infra on Kubernetes¶

This tutorial explains how to set up OctoPerf On-Premise Infra on a Kubernetes cluster using the OctoPerf Helm Chart.

Prerequisites¶

This tutorial requires:

- Knowing what a Makefile is and what it is used for,

- Knowing what Docker is and what it is used for,

- A basic understanding of Kubernetes concepts (such as pods, deployments and replica sets),

- Knowing how to use the kubectl command to interact with your Kubernetes (k8s) cluster,

- Minikube installed on your computer (which itself requires VirtualBox),

- Helm installed. Helm lets you install Helm Charts on your k8s cluster,

- A local computer running Linux, preferably Ubuntu LTS, with at least 4 CPUs and 16GB RAM.

The next chapters of this tutorial assume you are familiar with all the technologies listed above.

Overview¶

This tutorial helps you to:

- Set up a virtual machine with k8s preinstalled using Minikube,

- Install the tools required to interact with that k8s cluster,

- Install OctoPerf On Premise Infra Helm Chart on your local k8s cluster.

Getting Started¶

To get started, you must:

- Install VirtualBox,

- Install Kubectl command-line tool,

- Install Minikube,

- Install Helm.

Make sure to start Minikube with at least 2 CPUs and 8GB RAM. By default, minikube is configured to use only 1 CPU with 1024MB RAM. It won't be enough to run OctoPerf On Premise Infra. Minikube automatically configures kubectl to interact with the k8s cluster inside the virtual machine. The VM should have the following private IP: 192.168.99.100.
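Before starting Minikube, it can help to confirm that the tools listed above are actually on the PATH. The following is a minimal sketch, not part of the OctoPerf tooling: the `missing_tools` helper name is hypothetical, and `VBoxManage` is assumed to be the command that the VirtualBox installation provides.

```shell
#!/bin/sh
# Print the name of every tool from the argument list that is not on the PATH.
# `missing_tools` is a hypothetical helper, not part of Minikube or Helm.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Report anything this tutorial needs that is not installed yet.
# Prints nothing when all four tools are found.
missing_tools VBoxManage kubectl minikube helm
```

The CPU and memory settings can also be stored once with `minikube config set cpus 4` and `minikube config set memory 8192`, so that later `minikube start` calls pick them up without extra flags.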

Minikube Setup¶

Start a new virtual machine using minikube. It may take a few minutes before the virtual machine is up and running. You should see the following message once the virtual machine is started:

ubuntu@laptop:~$ minikube start --cpus 4 --memory 8192

😄 minikube v1.2.0 on linux (amd64)

💡 Tip: Use 'minikube start -p <name>' to create a new cluster, or 'minikube delete' to delete this one.

🔄 Restarting existing virtualbox VM for "minikube" ...

⌛ Waiting for SSH access ...

🐳 Configuring environment for Kubernetes v1.15.0 on Docker 18.09.6

🔄 Relaunching Kubernetes v1.15.0 using kubeadm ...

⌛ Verifying: apiserver proxy etcd scheduler controller dns

🏄 Done! kubectl is now configured to use "minikube"

You can now check the cluster is running by getting nodes:

ubuntu@laptop:~$ kubectl get nodes

NAME       STATUS   ROLES    AGE   VERSION
minikube   Ready    master   1m    v1.15.0

We now have a working Kubernetes cluster running inside a virtual machine in VirtualBox. The next step is to configure Helm.

Helm Setup¶

Helm is a package manager for Kubernetes. Thanks to our Helm Chart, you can easily install and run OctoPerf On Premise Infra on any k8s cluster.

First, let's add OctoPerf's Helm repository. By default, Helm is configured with a single repository:

ubuntu@laptop:~$ helm repo list

NAME      URL
stable    https://kubernetes-charts.storage.googleapis.com

Let's add our own repository now:

ubuntu@laptop:~$ helm repo add octoperf https://helm.octoperf.com

"octoperf" has been added to your repositories

ubuntu@laptop:~$ helm repo list

NAME      URL
stable    https://kubernetes-charts.storage.googleapis.com
octoperf  https://helm.octoperf.com

The OctoPerf Helm chart repository is now part of your Helm repositories. Let's refresh the repositories and check the available charts:

ubuntu@laptop:~$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "octoperf" chart repository

...Successfully got an update from the "stable" chart repository

Update Complete.

ubuntu@laptop:~$ helm search octoperf

NAME                         CHART VERSION  APP VERSION  DESCRIPTION
octoperf/enterprise-edition  11.0.0         11.0.0       Official OctoPerf Helm Chart for Enterprise-Edition

Helm is now aware of the OctoPerf On Premise Infra Helm chart. Put simply, a Helm chart is a kind of software installer dedicated to Kubernetes. We're now ready to install the chart on our minikube virtual machine.
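The commands in this tutorial use Helm 2 syntax (`helm search octoperf`, `--name`, `helm del --purge`). Helm 3 changed these: charts are searched with `helm search repo`, the release name is a positional argument of `helm install`, and releases are removed with `helm uninstall`. The sketch below shows one way to tell which major version is installed; the `helm_major` and `helm_search_cmd` helper names are hypothetical, and the parsing assumes the version string looks like `v3.12.0+g...` or `Client: v2.14.1+g...`.

```shell
#!/bin/sh
# Extract the major version from the text printed by `helm version --short`,
# read on stdin. `helm_major` is a hypothetical helper name.
helm_major() {
  sed -n 's/.*v\([0-9][0-9]*\)\..*/\1/p' | head -n 1
}

# Print the chart search command matching the given Helm major version.
# Anything other than "2" is treated as Helm 3 or later.
helm_search_cmd() {
  case "$1" in
    2) echo "helm search octoperf" ;;
    *) echo "helm search repo octoperf" ;;
  esac
}

# Only query helm when it is installed.
if command -v helm >/dev/null 2>&1; then
  major=$(helm version --short 2>/dev/null | helm_major)
  echo "Detected Helm major version: ${major:-unknown}"
  echo "Search with: $(helm_search_cmd "$major")"
fi
```

With Helm 2, `helm version` may also try to contact Tiller inside the cluster, so this check is best run once the cluster is up.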

Helm Chart Configuration¶

First, we need to create a values.yaml configuration file to adjust a few settings. Let's download the values.yaml from the examples we provide online:

ubuntu@laptop:~$ wget https://raw.githubusercontent.com/OctoPerf/helm-charts/master/enterprise-edition/examples/minikube/values.yaml

--2019-07-04 17:15:16-- https://raw.githubusercontent.com/OctoPerf/helm-charts/master/enterprise-edition/examples/minikube/values.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.120.133

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|151.101.120.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 763 [text/plain]

Saving to: ‘values.yaml’

values.yaml 100%[=====================================================================================================>] 763 --.-KB/s in 0s

2019-07-04 17:15:16 (121 MB/s) - ‘values.yaml’ saved [763/763]

Let's take a look at what's inside:

---
elasticsearch:
  # Permit co-located instances for solitary minikube virtual machines.
  antiAffinity: "soft"

  # Shrink default JVM heap.
  esJavaOpts: "-Xmx128m -Xms128m"

  # Request smaller persistent volumes.
  volumeClaimTemplate:
    accessModes: [ "ReadWriteOnce" ]
    storageClassName: "standard"
    resources:
      requests:
        storage: 1Gi

ingress:
  enabled: true
  annotations: {}
  path: /
  hosts:
    - "192.168.99.100.nip.io"

backend:
  env:
    server.hostname: "192.168.99.100.nip.io"

Basically, this configuration file configures:

- A 3-node Elasticsearch cluster, with all 3 Elasticsearch nodes running on the same Kubernetes node (the minikube VM),

- The On Premise Infra stack, which includes the backend, frontend and documentation servers,

- An ingress load-balancer exposing the relevant services on host 192.168.99.100.nip.io.

Info

nip.io is a convenient DNS service for development purposes that provides DNS records for private IPs.
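If your Minikube VM does not get the 192.168.99.100 address, the hostnames in values.yaml need to follow the actual IP. The sketch below is one possible way to do that; the `nip_host` and `retarget_values` helper names and the `values.local.yaml` file name are hypothetical, and it assumes the IP only appears in values.yaml as part of the nip.io hostnames shown above.

```shell
#!/bin/sh
# Build the nip.io hostname that resolves to the given IPv4 address.
# `nip_host` is a hypothetical helper name.
nip_host() {
  printf '%s.nip.io\n' "$1"
}

# Replace every 192.168.99.100 occurrence in a values file read on stdin
# with the IP given as first argument, writing the result to stdout.
retarget_values() {
  sed "s/192\.168\.99\.100/$1/g"
}

# Usage, assuming minikube is installed and the VM is running:
#   ip=$(minikube ip)
#   retarget_values "$ip" < values.yaml > values.local.yaml
#   echo "OctoPerf will be served at http://$(nip_host "$ip")"
```

The rewritten file can then be passed to Helm with `-f` in place of the downloaded values.yaml.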

Helm Chart Setup¶

To make subsequent command-lines easier to run, let's create a very simple Makefile:

default: dry-run

NAMESPACE=octoperf
RELEASE ?= enterprise-edition
HELM_PARAMS=-f values.yaml --name $(RELEASE) --namespace $(NAMESPACE)

# Render the chart without installing anything.
dry-run:
	helm install octoperf/enterprise-edition $(HELM_PARAMS) --dry-run

install:
	helm install octoperf/enterprise-edition $(HELM_PARAMS)

get-all:
	kubectl -n $(NAMESPACE) get all

purge:
	helm del --purge $(RELEASE)

# The leading "-" tells make to keep going if a command fails.
clean:
	-helm delete $(RELEASE)
	-helm del --purge $(RELEASE)

That way, we can easily install the helm chart on minikube by typing make install:

ubuntu@laptop:~/kubernetes$ make install

helm install octoperf/enterprise-edition -f values.yaml --name enterprise-edition --namespace octoperf

NAME: enterprise-edition

NAMESPACE: octoperf

STATUS: DEPLOYED

...

NOTES:

Thank you for installing OctoPerf enterprise-edition! Your release is named enterprise-edition.

To learn more about the release, try:

$ helm status enterprise-edition

$ helm get enterprise-edition

A whole range of k8s resources of different kinds (ConfigMap, DaemonSet, Service, StatefulSet, etc.) are created at once. To get the status of the enterprise-edition release, run helm status enterprise-edition in your terminal:

RESOURCES:
==> v1/ConfigMap
NAME                                DATA  AGE
enterprise-edition-backend-config   6     3m22s
enterprise-edition-frontend-config  1     3m22s

==> v1/DaemonSet
NAME                         DESIRED  CURRENT  READY  UP-TO-DATE  AVAILABLE  NODE SELECTOR  AGE
enterprise-edition-doc       1        1        1      1           1          <none>         3m22s
enterprise-edition-frontend  1        1        1      1           1          <none>         3m22s

==> v1/Pod(related)
NAME                               READY  STATUS   RESTARTS  AGE
elasticsearch-master-0             2/2    Running  0         3m22s
elasticsearch-master-1             2/2    Running  0         3m22s
elasticsearch-master-2             2/2    Running  0         3m22s
enterprise-edition-backend-0       1/1    Running  0         3m22s
enterprise-edition-doc-2kw5z       1/1    Running  0         3m22s
enterprise-edition-frontend-5kdqj  1/1    Running  0         3m22s

==> v1/Service
NAME                                 TYPE       CLUSTER-IP      EXTERNAL-IP  PORT(S)            AGE
elasticsearch-master                 ClusterIP  10.99.170.60    <none>       9200/TCP,9300/TCP  3m22s
elasticsearch-master-headless        ClusterIP  None            <none>       9200/TCP,9300/TCP  3m22s
enterprise-edition-backend           ClusterIP  10.100.187.186  <none>       8090/TCP           3m22s
enterprise-edition-backend-headless  ClusterIP  None            <none>       8090/TCP,5701/TCP  3m22s
enterprise-edition-doc               ClusterIP  None            <none>       80/TCP             3m22s
enterprise-edition-frontend          ClusterIP  None            <none>       80/TCP             3m22s

==> v1/StatefulSet
NAME                        READY  AGE
enterprise-edition-backend  1/1    3m22s

==> v1beta1/Ingress
NAME                        AGE
enterprise-edition-ingress  3m22s

==> v1beta1/PodDisruptionBudget
NAME                            MIN AVAILABLE  MAX UNAVAILABLE  ALLOWED DISRUPTIONS  AGE
elasticsearch-master-pdb        N/A            1                1                    3m22s
enterprise-edition-backend-pdb  N/A            1                1                    3m22s

==> v1beta1/StatefulSet
NAME                  READY  AGE
elasticsearch-master  3/3    3m22s

It may take anywhere from a few minutes to a few dozen minutes (depending on your internet speed) to get everything up and running. Remember that about a dozen Docker images of various sizes are required to run OctoPerf Enterprise-Edition (along with Elasticsearch) on k8s.

Info

The configuration used in this tutorial is suitable for testing purposes on minikube only. Please see the Helm chart documentation for a comprehensive list of all possible settings.

Web UI¶

Now, open 192.168.99.100.nip.io in your web browser. Should you see an HTTP 503 Service Unavailable message, please wait until all resources are up and running. You can check the status of the various resources using the terminal:

ubuntu@laptop:~/kubernetes$ kubectl -n octoperf get pods

NAME                               READY  STATUS   RESTARTS  AGE
elasticsearch-master-0             2/2    Running  0         6m37s
elasticsearch-master-1             2/2    Running  0         6m37s
elasticsearch-master-2             2/2    Running  0         6m37s
enterprise-edition-backend-0       1/1    Running  0         6m37s
enterprise-edition-doc-2kw5z       1/1    Running  0         6m37s
enterprise-edition-frontend-5kdqj  1/1    Running  0         6m37s
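Rather than reading the pod listing by eye, the same output can be filtered for pods that are not ready yet. This is a minimal sketch, assuming the column layout shown above (NAME, READY, STATUS); the `pods_not_ready` helper name is hypothetical.

```shell
#!/bin/sh
# Read `kubectl get pods` output on stdin and print the name of every pod
# that is not Running with all of its containers ready.
# `pods_not_ready` is a hypothetical helper name.
pods_not_ready() {
  awk 'NR > 1 {
    split($2, ready, "/")
    if (ready[1] != ready[2] || $3 != "Running") print $1
  }'
}

# Usage, assuming kubectl points at the minikube cluster:
#   kubectl -n octoperf get pods | pods_not_ready
# kubectl can also block until every pod in the namespace is ready:
#   kubectl -n octoperf wait --for=condition=Ready pod --all --timeout=600s
```

An empty result means every listed pod is running with all containers ready, at which point the Web UI should respond.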

Info

If the 192.168.99.100.nip.io host does not respond (which may happen depending on your ISP), edit your /etc/hosts file and add the following entry manually:

192.168.99.100 192.168.99.100.nip.io
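The hosts entry can also be added from the terminal without duplicating it on repeated runs. The sketch below is one way to do so; the `add_nip_entry` helper name and the `hosts.copy` file name are hypothetical, and the file path is passed explicitly so the change can be tried on a copy before touching /etc/hosts.

```shell
#!/bin/sh
# Append "IP IP.nip.io" to a hosts file unless that exact line already exists.
# `add_nip_entry` is a hypothetical helper: first argument is the IP,
# second argument is the path of the hosts file to edit.
add_nip_entry() {
  ip=$1
  hosts_file=$2
  entry="$ip $ip.nip.io"
  grep -qxF "$entry" "$hosts_file" 2>/dev/null ||
    printf '%s\n' "$entry" >> "$hosts_file"
}

# Usage (writing /etc/hosts itself requires root):
#   cp /etc/hosts hosts.copy
#   add_nip_entry 192.168.99.100 hosts.copy
#   sudo cp hosts.copy /etc/hosts
```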

Create an Account¶

OctoPerf EE comes completely empty. You need to create an account and register a load generator (computer used to generate the load) to be able to run load tests:

- Sign up and create a new account,

- Create an On-Premise Provider: providers are groups of load generators,

- And register an On-Premise Agent within the previously created On-Premise Provider.

To easily register an agent for testing purposes:

- Follow the steps above to create an account with an On-Premise Provider,

- Get the generated agent command-line from the Web UI,

- SSH into minikube VM:

ubuntu@laptop:~/kubernetes$ minikube ssh

                         _             _
            _         _ ( )           ( )
  ___ ___  (_)  ___  (_)| |/')  _   _ | |_      __
/' _ ` _ `\| |/' _ `\| || , <  ( ) ( )| '_`\  /'__`\
| ( ) ( ) || || ( ) || || |\`\ | (_) || |_) )(  ___/
(_) (_) (_)(_)(_) (_)(_)(_) (_)`\___/'(_,__/'`\____)

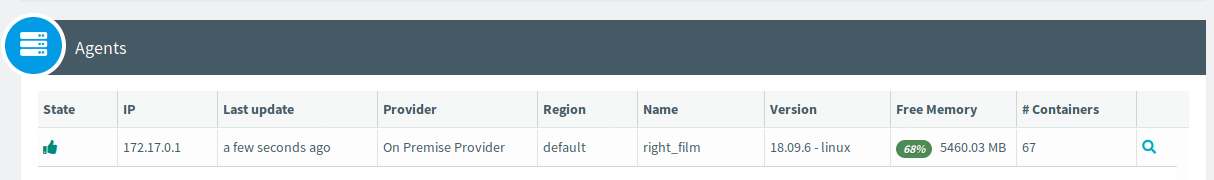

$ sudo docker run -d --restart=unless-stopped --name right_film -v /var/run/docker.sock:/var/run/docker.sock -e AGENT_TOKEN='XXXXXXXXXX' -e SERVER_URL='http://192.168.99.100.nip.io:80' octoperf/docker-agent:11.0.0

- Copy and paste the agent command-line directly here. The OctoPerf docker-agent will run directly inside the virtual machine. It should show up in Web UI Agents table after a while.

Agent running inside Minikube VM appears with a private IP specific to Docker bridge network.

Agent running inside Minikube VM appears with a private IP specific to Docker bridge network.

Congratulations! You've just managed to run OctoPerf Enterprise-Edition on Kubernetes using Minikube on your own computer.